6 April 2015. Engineers from Rice University in Houston wrote a series of algorithms that make it possible to calculate blood volume from facial video images, rather than by attaching a device to a person’s skin. The team from Rice’s Scalable Health Initiative, which examines applications of technology to improve the conduct of health care, published its findings in the 1 May 2015 issue of the journal Biomedical Optics Express, from The Optical Society.

The team, led by Rice graduate student and first author Mayank Kumar, is seeking techniques to measure an individual’s health status using non-contact methods. With some patients, such as newborn infants, measuring vital signs such as pulse rate or blood pressure with routine medical devices that contact the skin could apply too much pressure and harm the patient.

In this case, Kumar and colleagues examined blood volume pulse, a measure of heart rate variability and an indicator of overall cardiac health. Changing blood volume produces minute changes in skin color, and a method known as photoplethysmography, or PPG, measures physiological processes based on those changes in skin tone.
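As a rough illustration of how a pulse rate could be recovered from periodic skin tone changes, the Python sketch below looks for the strongest periodic component in a brightness signal. It is a generic frequency scan, not the Rice team's method. The function name `estimate_pulse_bpm`, the per-frame brightness input, and the search range of 40 to 240 beats per minute are all assumptions made for the example.

```python
import math

def estimate_pulse_bpm(samples, fps, lo_bpm=40, hi_bpm=240):
    """Return the pulse rate (beats per minute) whose frequency carries the
    most power in `samples`, a list of per-frame skin brightness values.

    Hypothetical helper: scans whole-number rates between lo_bpm and hi_bpm.
    """
    n = len(samples)
    mean = sum(samples) / n
    centered = [x - mean for x in samples]  # remove the constant skin tone

    best_bpm, best_power = None, -1.0
    for bpm in range(lo_bpm, hi_bpm + 1):
        freq = bpm / 60.0  # beats per minute -> cycles per second
        re = sum(x * math.cos(2 * math.pi * freq * i / fps)
                 for i, x in enumerate(centered))
        im = sum(x * math.sin(2 * math.pi * freq * i / fps)
                 for i, x in enumerate(centered))
        power = re * re + im * im
        if power > best_power:
            best_bpm, best_power = bpm, power
    return best_bpm

# Synthetic example: 10 seconds of video at 30 frames per second,
# with brightness oscillating at 1.2 Hz (72 beats per minute).
fps = 30.0
signal = [math.sin(2 * math.pi * 1.2 * i / fps) for i in range(300)]
print(estimate_pulse_bpm(signal, fps))  # 72
```

Real video would add noise, lighting drift, and motion on top of the pulse signal, which is where the difficulties described in the rest of this article come in.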

Up to now, say the authors, techniques that apply imaging to PPG for measuring skin tone changes work for people with fair skin, sitting motionless in well-lit surroundings. When applied to individuals with darker skin, or people moving even slightly or in shadows, the techniques are less effective, and thus less useful for day-to-day health care needs.

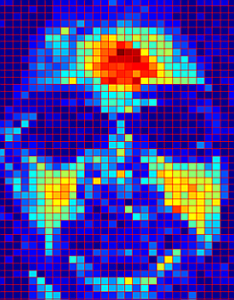

The Rice engineers set out to develop methods for measuring changes in skin tone for a wider range of facial types and colors, as well as people in motion and less-than-perfect lighting. A key discovery was that light reflects somewhat differently from various regions of the face. As a result, their technique, called DistancePPG, computes changes in skin tone from different parts of the face, and calculates a weighted average. This weighted average “improved the accuracy of derived vital signs,” says Kumar in an Optical Society statement, “rapidly expanding the scope, viability, reach and utility of camera-based vital sign monitoring.”
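The idea of a weighted average over facial regions can be sketched in a few lines of Python. This is only an illustration: the article does not say how DistancePPG chooses its weights, so the region names, the weights, and the function `weighted_average_signal` below are hypothetical.

```python
def weighted_average_signal(region_signals, weights):
    """Combine per-region skin tone signals into a single signal.

    region_signals: list of equal-length lists, one per facial region.
    weights: one non-negative number per region; larger means more trusted.
    Both inputs are assumptions for illustration, not the published method.
    """
    if len(region_signals) != len(weights):
        raise ValueError("need exactly one weight per region")
    total = sum(weights)
    if total <= 0:
        raise ValueError("weights must sum to a positive number")
    length = len(region_signals[0])
    if any(len(s) != length for s in region_signals):
        raise ValueError("all region signals must have the same length")

    return [
        sum(w * s[t] for w, s in zip(weights, region_signals)) / total
        for t in range(length)
    ]

# Two made-up regions: the forehead is trusted three times as much as the cheek.
forehead = [1.0, 2.0, 3.0]
cheek = [3.0, 2.0, 1.0]
print(weighted_average_signal([forehead, cheek], [3.0, 1.0]))  # [1.5, 2.0, 2.5]
```

In the example, the combined signal stays closer to the forehead readings because that region carries the larger weight, so a noisy or poorly lit region contributes less to the result.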

Another challenge was accounting for motion, where reflections of light can change as an individual’s face moves in relation to the source of light. To address these conditions, the researchers devised an algorithm for DistancePPG that identifies key facial features (e.g., eyes, nose, and mouth) and tracks their positions across video frames.

Kumar and colleagues tested the DistancePPG algorithms with groups of volunteers having a range of skin colors from fair to dark, recording video of the volunteers’ faces during common day-to-day activities. Measurements of skin tone changes and estimates of blood volume pulse were compared to simultaneous measurements made by pulse oximeters attached to the individuals’ earlobes.
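One simple way to quantify agreement between camera-based estimates and a contact reference is the average size of the difference between paired readings. The Python sketch below assumes both devices report pulse rate in beats per minute at the same moments. The article does not state which comparison metric the researchers used, and the function name and sample readings are invented for illustration.

```python
def mean_absolute_error(camera_bpm, reference_bpm):
    """Average absolute difference between paired pulse rate readings.

    camera_bpm: estimates from video, in beats per minute (hypothetical data).
    reference_bpm: simultaneous pulse oximeter readings, same units and order.
    """
    if len(camera_bpm) != len(reference_bpm) or not camera_bpm:
        raise ValueError("need two non-empty lists of equal length")
    return sum(abs(c - r) for c, r in zip(camera_bpm, reference_bpm)) / len(camera_bpm)

# Made-up readings taken at three moments.
camera = [70, 72, 75]
oximeter = [71, 72, 73]
print(mean_absolute_error(camera, oximeter))  # 1.0
```

A lower value means the camera readings track the reference more closely. Computing the value separately for each activity would show where accuracy falls off.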

The researchers report getting mixed results from their tests. Their findings show DistancePPG returns generally accurate readings of blood volume pulse during quiet activities, such as reading or watching videos. The technique is less reliable in measuring blood volume when individuals make larger-scale movements, such as talking or smiling, that more dramatically change the light reflected off their faces.

The Rice engineers plan to refine their algorithms to better account for facial movements. Nonetheless, the researchers say a patent is pending on DistancePPG technology, which they expect can eventually be applied in non-hospital settings, such as apps using smartphone cameras to report an individual’s vital signs.

Read more:

- Inexpensive Test Bests PSA for Prostate Cancer Screening

- Mobile App in Development to Manage COPD

- Hand-Held DNA Sequencer IDs Bacteria, Viruses

- Sensor-Bandage Device Detects Early Forming Bedsores

- Pen-Dispensed Bio-Inks Developed for On-Demand Sensors
