23 May 2018. An engineering team designed a process for translating neuromuscular signals into computer controls for prosthetic hands that is faster and more generic than current machine-learning models. Researchers from the joint biomedical engineering program at North Carolina State University in Raleigh and the University of North Carolina at Chapel Hill describe their techniques in the 18 May early-access issue of IEEE Transactions on Neural Systems and Rehabilitation Engineering (paid subscription required).

Today’s computerized controls for prosthetic limbs and hands often rely on models derived from machine learning, a form of artificial intelligence. These algorithms for controlling prosthetics are learned from patterns in the muscle activity of individual users, which are then translated into commands for the devices, an often time-consuming process. The team led by biomedical engineering professor He (Helen) Huang is seeking a faster and simpler method for developing computer controls that could also be applied to a wide range of users with prosthetic hands.

Huang notes in a university statement that “every time you change your posture, your neuromuscular signals for generating the same hand/wrist motion change. So relying solely on machine learning means teaching the device to do the same thing multiple times.” Instead of machine learning, the researchers devised a generic musculoskeletal model.

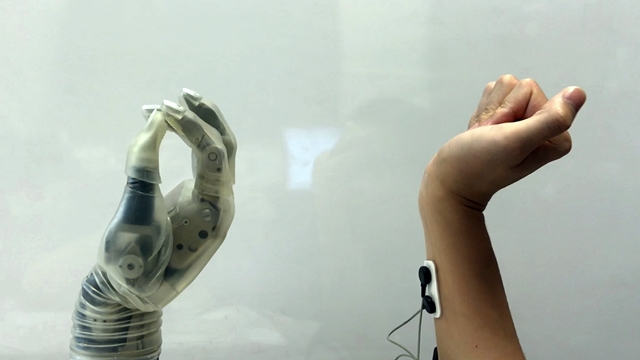

“When someone loses a hand, their brain is networked as if the hand is still there,” Huang adds. “So, if someone wants to pick up a glass of water, the brain still sends those signals to the forearm. We use sensors to pick up those signals and then convey that data to a computer, where it is fed into a virtual musculoskeletal model. The model takes the place of the muscles, joints, and bones, calculating the movements that would take place if the hand and wrist were still whole.”

The team from Huang’s Neuromuscular Rehabilitation Engineering Lab based the new model on hand and arm movements of 6 able-bodied volunteers with sensors generating data on movements of their wrists, hands, and forearms. The participants performed specific tasks demonstrating different postures of their hands and arms, with the data also used for machine-learning algorithms to control prosthetic hands. The same 6 volunteers and a transradial or hand amputee tested a prosthetic hand controller using the generic model, as well as individual machine-learning models customized for each participant, with minimal training in both cases.

The results show the participants completed assigned hand-wrist posture tasks with controllers using the generic model in about the same amount of time as with the machine-learning models. Controllers with the generic model also had somewhat more accurate performance and operated more efficiently. However, the authors report the able-bodied participants generally performed the tasks better than the amputee.

“By incorporating our knowledge of the biological processes behind generating movement,” says Huang, “we were able to produce a novel neural interface for prosthetics that is generic to multiple users, including an amputee in this study, and is reliable across different arm postures.” The team is seeking more transradial amputees to test the model with prosthetic hands performing day-to-day chores, before planning clinical trials.

More from Science & Enterprise:

- Trial to Test 3-D Printed Prosthetic Arms for Kids

- Wearable Devices Explored to Detect Emotional States

- Virtual Reality Harnessed for Stroke Rehab

- Grant Funds A.I. Cognitive Change Rehab Study

- Brain Wave Data Harnessed for Open-Source Brain Model
