26 April 2017. Computer scientists at the Georgia Institute of Technology developed a process that simplifies remote-controlled robotic grasping for end users. The team from Sonia Chernova's robotics lab in Atlanta described their methods and test results at this year's Conference on Human-Robot Interaction, held in March in Vienna, Austria.

The Georgia Tech researchers are seeking ways to make robotics easier and more intuitive for home and small-business users. With current technology, controlling a robotic arm and gripper involves a split-screen visual display, where the end user operates the machinery with a mouse. Users must manipulate the arm and gripper with a ring-and-arrow control for each of the three dimensions to bring the gripper into its proper position, and then grasp the target object. Robotics experts are familiar and comfortable with this method, but novices need practice to master it, and the technique would likely be an obstacle for many home users, such as older individuals needing assistance.

“Instead of a series of rotations, lowering and raising arrows, adjusting the grip and guessing the correct depth of field,” says Chernova in a Georgia Tech statement, “we’ve shortened the process to just two clicks.” The researchers' process replaces the split screen with a single display, where the end user points at the object and then selects a grasping motion.

The new method, developed by Ph.D. candidate David Kent, uses computer vision and 3-D mapping algorithms that analyze the object's surface geometry to determine where to position the gripper. The software then moves the arm and gripper into place and presents the user with a choice of grasping motions to complete the task. The user is thus relieved of manipulating the arm and gripper and can focus only on the end result.

Chernova illustrates the system’s operation with the example of retrieving a bottle. “The robot can analyze the geometry of shapes,” she notes, “including making assumptions about small regions where the camera can’t see, such as the back of a bottle. Our brains do this on their own — we correctly predict that the back of a bottle cap is as round as what we can see in the front. In this work, we are leveraging the robot’s ability to do the same thing to make it possible to simply tell the robot which object you want to be picked up.”

The researchers tested the system with student volunteers, giving them a series of grasping tasks to perform with either the conventional split-screen display or the new single-screen, point-and-click display. Participants using the point-and-click technique completed the tasks about 2 minutes faster on average than those using the conventional method. Participants using the conventional technique also made an average of 4 errors per task, compared with 1 error for point-and-click users.

The developers believe their software can be incorporated into assistive robots for older individuals or people with disabilities, and could also be used in search-and-rescue systems and by astronauts in space. The remote manipulation and agile grasping software modules have been released as open-source software.

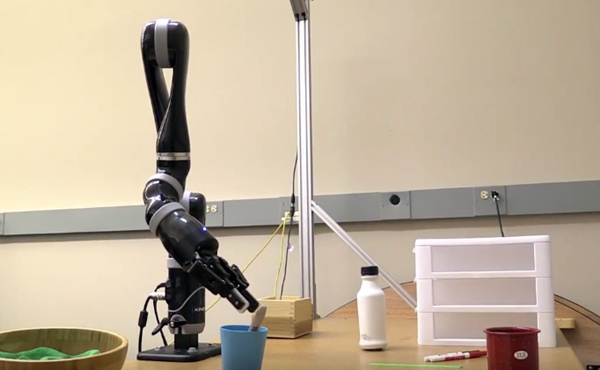

In the following video, the research team shows the software in action with a robotic device.

