
By Alan, on November 30th, 2023  Embrace2 smart watch (Andres Babic, Empatica Inc.) 30 Nov. 2023. A developer of medical monitoring systems with data from wearable devices will soon start asking individuals with epilepsy to offer data for an algorithm that predicts seizures. Empatica Inc. in Boston today announced the study that aims to capture evidence from people with epilepsy in the general population, for an algorithm demonstrated earlier with a few participants.

Empatica designs and markets wearable devices for research, clinical trials, and monitoring health conditions. One of its devices is a smart watch called Embrace2 for people with epilepsy that detects possible convulsive seizures, including during sleep. Epilepsy is a neurological condition causing recurring seizures that affects more than one percent of the U.S. population. Those seizures can range from brief loss of awareness to muscular twitching or shaking and loss of consciousness called convulsions. The Empatica seizure-detector watch, cleared by FDA, detects changes in electrical activity on the skin, and connects wirelessly to a phone app that alerts parents or caregivers of a seizure, as well as another diary-style app that shows patterns of seizures.

Until recently, forecasting rather than detecting the onset of seizures required capturing data with an electroencephalogram or EEG that requires wearing a helmet-like device with electrodes attached to the scalp. In Nov. 2021, a team from the Mayo Clinic and other institutions reported on an algorithm that processes data captured from a clinical-grade Empatica watch. This device detects changes in several physiological indicators in addition to electrical activity on the skin. The team developed a recurrent neural network, a machine-learning algorithm that processes data in sequence over time, which accurately predicted seizures in five of six subjects with epilepsy.

Physiological and lifestyle indicators, and seizure experiences

The new initiative seeks to validate the earlier proof-of-concept findings, as well as further develop the algorithm with data and experiences from the general population of people with epilepsy. The study expects to capture data from participating individuals with an Empatica watch and app, measuring several physiological and lifestyle indicators, along with their seizure experiences. Empatica expects to begin enrolling participants in Jan. 2024, but interested individuals can pre-register on the company web site.

“Seizure forecasting has long been among the most-requested features for people with epilepsy,” says Rosalind Picard, Empatica co-founder and chief scientist in a company statement released through BusinessWire. Picard adds, “Patients with epilepsy understand the toll that uncertainty around seizures takes and we hope that this study will help give them better control over their life, reducing stress and perhaps also enabling early interventions that ultimately reduce or prevent seizures from happening.”

Empatica is spun-off from the Media Lab at Massachusetts Institute of Technology, where Picard is director of affective computing research. Science & Enterprise reported most recently on the company in Nov. 2022, when FDA cleared Empatica’s continuous patient monitoring system that collects real-time data on four digital health indicators for home patient care.

More from Science & Enterprise:

We designed Science & Enterprise for busy readers including investors, researchers, entrepreneurs, and students. Except for a narrow cookies and privacy strip for first-time visitors, we have no pop-ups blocking the entire page, nor distracting animated GIF graphics. If you want to subscribe for daily email alerts, you can do that here, or find the link in the upper left-hand corner of the desktop page. The site is free, with no paywall. But, of course, donations are gratefully accepted.

* * *

By Alan, on November 29th, 2023  (Bruno Aguirre, Unsplash. https://unsplash.com/photos/couple-sitting-on-the-bench-uLMEcr1O-1I) 29 Nov. 2023. A new challenge competition from XPrize calls for proactive and accessible health care solutions for improving the quality of life among older people worldwide. The XPrize Healthspan challenge, announced today at the Global Healthspan Summit in Riyadh, Saudi Arabia, expects to award up to $101 million to the winning entry, with pre-registration and public comment periods now open.

The XPrize Healthspan competition aims to close the gap between longer lifespans and the quality of life experienced by people as they age. While global life expectancy has increased in recent years, people in their later years are experiencing more chronic disease and disability, adding misery to their lives and trillions of dollars in health care costs. XPrize cites data showing the percentage of people worldwide age 60 and older is expected to double to 22 percent by 2050, creating an urgent need for healthier aging solutions.

The challenge is asking participants to design and develop treatments that restore muscle, brain, and immune functions lost to degradation from aging, in either a single therapeutic or combination of therapies. Proposed therapeutics should restore at least 10 years of functionality to older individuals, with an eventual goal of 20 years. The competition runs for seven years to 2030, to allow for development and clinical trials, but with intermediate milestone assessments and awards in 2025 and 2026.

XPrize is using a challenge to break through obstacles in health care innovation, such as arbitrary organizational or discipline silos, regulatory barriers, long development timetables particularly for clinical trials, lack of personalized options, and disparities in accessing new technologies. The competition organizers expect participating teams to propose solutions reflecting advances in health care science and technology from multiple fields.

Prize amounts scaled to longer restoration of functions

“By targeting aging with a single or combination of therapeutic treatments,” says founder and executive chairman Peter Diamandis in an XPrize statement, “it may be possible to restore function lost to age-related degradation of multiple organ systems.” Diamandis adds, “Converging exponential technologies such as A.I., epigenetics, gene therapy, cellular medicine, and sensors are allowing us to understand why we age. It’s time to revolutionize the way we age. Working across all sectors, we can democratize health and create a future where healthy aging is accessible for everyone and full of potential.”

XPrize plans to award a prize of $61 million to a team that develops one or more treatments restoring 10 years of muscular, cognitive, and immune functions, with a $71 million prize for restoring 15 years of those functions, and $81 million for a 20-year functional restoration. Winning teams are expected to deliver their proposed solutions in a year or less. Forty semi-finalists are expected to divide $10 million in prizes in 2025, with another $10 million in milestone prizes awarded to 10 finalists in 2026-27.

One of the challenge sponsors is Solve FSHD, a venture philanthropy and advocacy organization for facioscapulohumeral muscular dystrophy or FSHD, a type of muscular dystrophy with progressive muscle degeneration and weakness, but no cure. Solve FSHD is offering a separate $10 million bonus award, in parallel with the main competition, for treatments that improve muscle functions in people with the disease for at least 10 years.

Preliminary XPrize Healthspan challenge guidelines are now available for download, with pre-registration and public comments taken through June 2024. Final guidelines and the start of full registration are expected in July 2024.


* * *

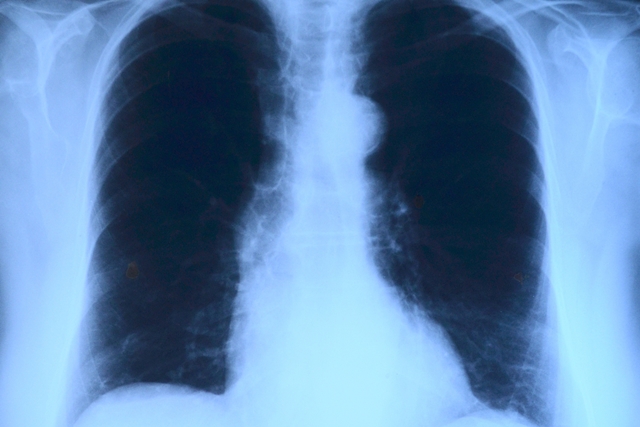

By Alan, on November 28th, 2023  Lung x-ray (Toubibe, Pixabay. https://pixabay.com/photos/x-ray-image-roentgen-thorax-568241/) 28 Nov. 2023. A developer of messenger RNA therapies for respiratory and rare diseases says it received clearance to begin a clinical trial in the U.K. for an inhaled treatment for viral lung conditions. Ethris GmbH in Munich, Germany says the Medicines and Healthcare products Regulatory Agency or MHRA in the U.K. authorized a clinical trial of the company’s experimental drug code-named ETH47.

Ethris is a biotechnology company that creates therapies with synthetic messenger ribonucleic acid or mRNA, a single-stranded nucleic acid that carries instructions for making proteins from genetic codes in DNA to the protein-making ribosomes in cells. In its natural state, says Ethris, mRNA is unstable in the body and can cause immune reactions. As a result, the company devised a technology for stabilized non-immunogenic mRNA, or SNIM-RNA, that it says overcomes these obstacles. Ethris says its mRNA treatments can be given repeatedly to patients to enable sustained production of therapeutic proteins in the body, or replace missing proteins.

SNIM-RNA therapies, says the company, are delivered with lipid or natural oil nanoscale particles that can cross cell membranes. Ethris says its lipid nanoparticle process makes it possible to administer mRNA treatments with aerosols to the upper and lower respiratory system. The company says it also can produce its treatments in freeze-dried form, which along with aerosol formulations, remain usable at room temperatures.

Proteins with immune defense against viruses

ETH47 is Ethris’s lead product, designed to treat viral infections in the respiratory tract. The company says ETH47 can be formulated as a nasal spray or given with an inhaler directly to the lungs. Ethris says ETH47 delivers mRNA with instructions to generate type 3 interferons, proteins providing an immune defense against viruses. Once in respiratory mucous membrane cells, says the company, ETH47 induces an innate immune response to stop viral entry and replication. Because ETH47 is designed to work independently of specific viruses, says Ethris, it can address a range of viral infections, including those triggering attacks from asthma.

The early-stage clinical trial authorized by MHRA is expected to enroll healthy participants for testing ETH47, and begin in December 2023. No further details about the study were disclosed.

“The trial start will be our first program to enter the clinic,” says Ethris CEO Carsten Rudolph in a company statement, adding this phase of ETH47’s development “brings us a step closer to providing innovative solutions that address the unmet need of respiratory viral infections, especially for the vulnerable population or patients with an underlying respiratory disease e.g., asthma or COPD.”

The company’s other programs are developing drugs for rare diseases with inhaled therapies, injections, and implanted treatments, as well as vaccines. In Sept. 2023, researchers at Ethris published a study in Nature Biomedical Engineering with colleagues from DIOSynVax in Cambridge, U.K. demonstrating in lab mice a vaccine protecting against a broad range of sarbecoviruses that include the zoonotic viruses responsible for Covid-19 infections and other pandemics.


* * *

By Alan, on November 27th, 2023  High-density micro-array patch applied to the upper arm (Vaxxas) 27 Nov. 2023. A vaccine delivered with a patch device is shown in a clinical trial to generate neutralizing antibodies against measles and rubella similar to conventional injections. Results of the trial, conducted among healthy adult volunteers in Australia and reported on 17 Nov. 2023 in the journal MDPI Vaccines, also show the patch-delivered vaccine is safe and well tolerated.

Measles is a highly contagious viral disease causing skin rash, coughing, and sneezing, but largely controlled in some parts of the world through widespread childhood vaccination. Where vaccinations are not readily available, often in lower-resource regions, measles is still a threat to children, and according to the Measles and Rubella Partnership, claimed 128,000 lives in 2021. Rubella, sometimes called German measles, is a milder form of the disease, but in pregnant women can lead to children born with congenital rubella syndrome, a disease causing multiple birth defects.

Vaxxas, a biotechnology company in Cambridge, Mass. and Brisbane, Australia, is developing a patch-applicator device as an alternative delivery vehicle for vaccines. The Vaxxas device uses high-density microscale needles on a small patch, with the needles about 0.25 millimeters in length that do not cause pain, delivered in a single-use spring-loaded applicator. The company says the needles are coated with the vaccine and penetrate to only the outer skin layers, enough to alert the immune system that carries the vaccine to lymph nodes for invoking a general immune response. And Vaxxas says vaccines on the patch can be stored at ambient temperatures without refrigeration.

Science & Enterprise reported on clinical trials of the Vaxxas patch with a Covid-19 vaccine, showing the vaccine delivered with the device is well-tolerated and generates an immune response similar to conventional injections. The company is also testing the patch with a commercial influenza vaccine, and developing another device to deliver a typhoid vaccine. Results from a separate trial support the feasibility of individuals self-administering vaccines with a micro-needle patch, without the supervision of a clinician.

Comparable antibody production rates as injections

In the new early-stage trial, Vaxxas enrolled 63 healthy adult volunteers in Australia to test the patch device with a vaccine against measles and rubella. Participants were randomly assigned to receive a patch with either a high or low dose of the vaccine, a placebo patch, or an approved measles-rubella vaccine given with an injection under the skin. After 28 days, the authors report that compared to the beginning of the trial, low-dose vaccine patches generated neutralizing antibodies against measles and rubella at about the same rate (38%) as the conventional vaccine (36%), and twice the rate of the high-dose patches, 19 to 25 percent. The authors say previous vaccination or exposure to measles and rubella created a higher baseline for comparison.

Other findings show patch vaccine recipients displayed at most mild to moderate adverse effects. And the vaccines in the devices were still considered usable even after being transported for three days at 40 degrees C or 104 degrees F.

“Measles and rubella remain significant health concerns in many parts of the world,” says Vaxxas CEO David Hoey in a company statement released through BusinessWire, “and we look forward to moving this product forward to later stage clinical trials.” Among the later stage trials, says the company, is an early- and mid-stage study in The Gambia in west Africa among children not yet vaccinated for measles or rubella.

Vaxxas is following a similar strategy for its vaccine patch device as Micron Biomedical Inc., developer of a peel-and-stick patch for vaccines. Science & Enterprise reported in May 2023 on that company’s clinical trial in The Gambia showing its patch vaccine for measles and rubella generated comparable immune responses as conventional injections.


* * *

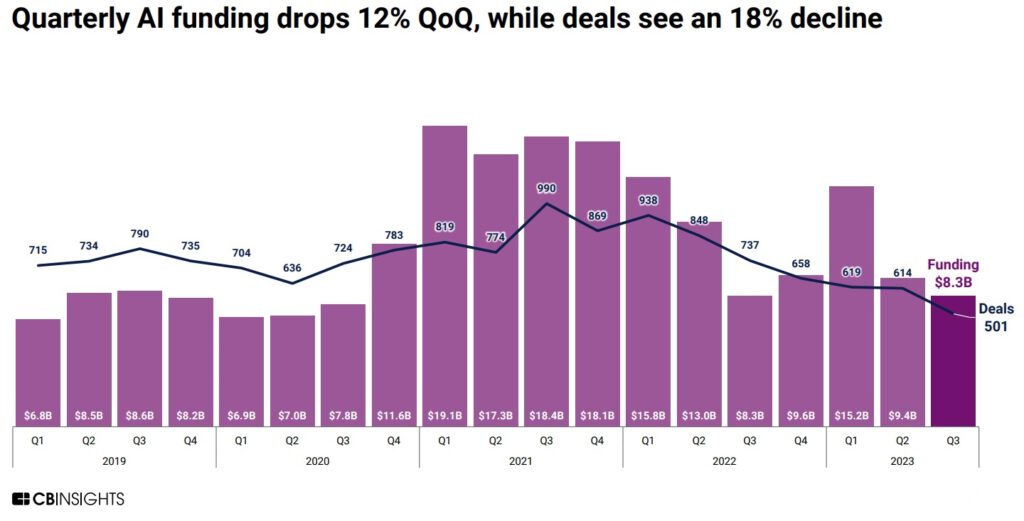

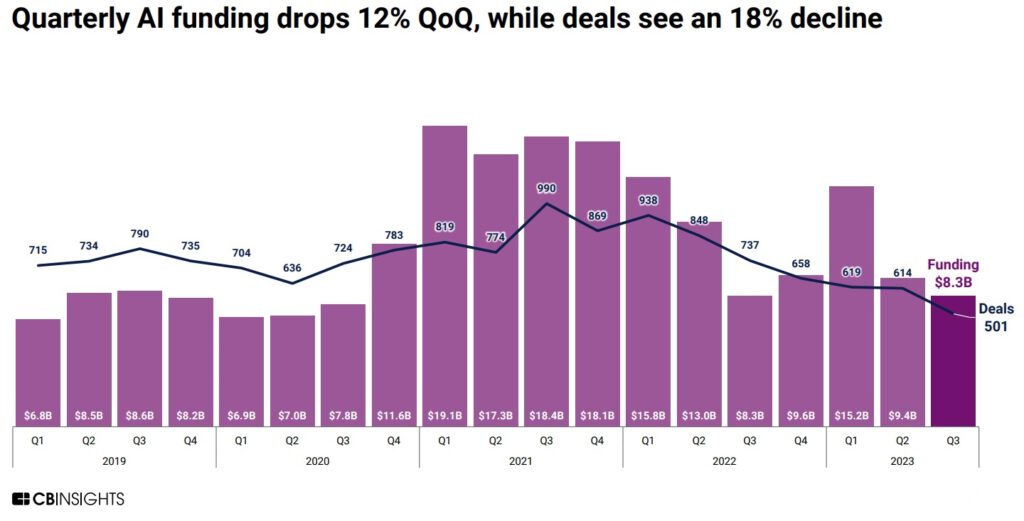

By Alan, on November 25th, 2023  (CB Insights) 25 Nov. 2023. With all the headlines generated by artificial intelligence these days, one would expect investors to show as much enthusiasm for the technology. According to CB Insights, however, venture investments in A.I. are declining each quarter in 2023, with the number of venture deals reaching a low level not seen since 2017.

In its report issued earlier this month (registration required), technology intelligence company CB Insights says venture investments in A.I. worldwide declined to $8.3 billion in the third quarter of 2023, down from $9.4 billion in the second quarter and $15.2 billion in Q1. In addition, the number of venture investment transactions in A.I. in the third quarter fell to 501, which CB Insights says is the fewest deals for a three-month period since 2017.

In an historical context, the CB Insights report looks less discouraging. The $8.3 billion raised by A.I. start-ups in Q3 2023 is comparable to quarterly investment totals worldwide in pre-pandemic 2019, with the average deal size rising in the third quarter to $26.3 million, from $19.6 million in Q2, and down slightly from $29.3 million in Q1. The median deal size — the half-way mark of smallest to largest transactions — so far this year, however, fell to $4 million by the third quarter, from $4.5 million in 2022 and $5.5 million in 2021.
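The difference between average and median deal size can be illustrated with a short Python sketch. The deal amounts below are hypothetical values chosen for illustration, not figures from the CB Insights report, and the function name `summarize` is likewise an assumption. The sketch shows how a single very large round can raise the average while the median, the half-way mark, falls.

```python
from statistics import mean, median

# Hypothetical deal sizes in $ millions; NOT data from the CB Insights report.
typical_quarter = [2, 3, 4, 5, 6, 8, 12]
# A quarter with smaller typical deals but one very large round.
skewed_quarter = [1, 2, 3, 4, 5, 6, 150]


def summarize(deals):
    """Return (average, median) deal size for a list of deal amounts."""
    return mean(deals), median(deals)


typical_avg, typical_med = summarize(typical_quarter)
skewed_avg, skewed_med = summarize(skewed_quarter)

print(f"Typical quarter: average {typical_avg:.1f}, median {typical_med}")
print(f"Skewed quarter:  average {skewed_avg:.1f}, median {skewed_med}")
```

In this made-up example the one large round pulls the average up, while the median drops because most deals are smaller.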

CB Insights also notes that U.S.-based A.I. start-ups raised $5.8 billion, 70 percent of all venture dollars in the third quarter of 2023, in 236 of the 501 deals. Among the top A.I. investment recipients in Q3 was Generate:Biomedicines in Somerville, Mass. that raised $273 million in its third venture round during September. Earlier this month, Science & Enterprise reported on a demonstration of that company’s generative A.I. technology applied to design of therapeutic proteins, published in the journal Nature.


* * *

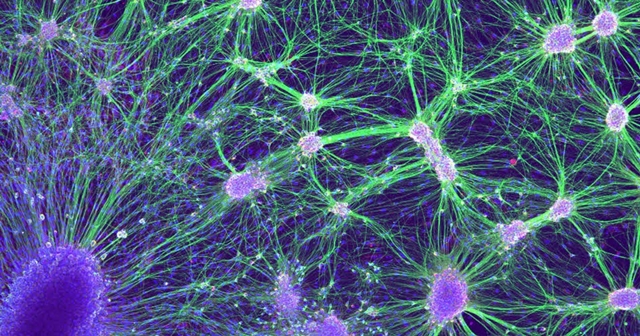

By Alan, on November 24th, 2023  Neurons (Laura Struzyna, University of Pennsylvania, NIH.gov) 24 Nov. 2023. Researchers from university labs in the U.K. are developing models of brain cell interactions using a company’s synthetic human cells derived from stem cells. The initiative brings together neuroscientists and cell biologists from the biotechnology company bit.bio (the name is spelled in all lower-case) in Cambridge, U.K. and the Institute of Psychiatry, Psychology and Neuroscience or IoPPN at King’s College London.

bit.bio is a six year-old business developing synthetic human cells for research and drug discovery, spun-off from labs at University of Cambridge. The company says its technology called opti-ox, short for optimized inducible overexpression, enables the design of human cells with specific properties and characteristics. The opti-ox process, says bit.bio, is similar to software programming, where the code in this case, is the cell’s genetics. The company says it alters the genome of induced pluripotent stem cells, so-called adult stem cells, with transcription factor proteins that regulate instructions delivered to cells with messenger RNA.

According to bit.bio, this process can be applied to a wide range of human cells, with its main product line called ioCells. One of the company’s applications is a line of synthetic cells for research derived from healthy human donors called ioWild Type Cells. Among the synthetic cells bit.bio offers in this line are neurons or signaling cells in the brain with genes expressing glutamate proteins regulating memory, cognition, and mood, and neurons with genes expressing gamma aminobutyric acid or GABA proteins that limit brain signals, associated with anxiety, stress, and fear. A third type of ioWild Type Cell is microglia, cells that perform repair and maintenance functions in the brain.

Multi-cell models to reveal complex brain processes

Two neuroscientists at IoPPN plan to generate models of interactions among brain cells with ioCells. Deepak Srivastava is a molecular biologist and Anthony Vernon is a pharmacologist, both studying functions of neurons and microglia, including those derived from human pluripotent stem cells. The team expects to build models of brain cell interactions for revealing complex processes in the brain that cannot be demonstrated with single-cell models. For example, says bit.bio, impairment of one cell type may affect the functioning of other cells in the brain.

The company says Srivastava and Vernon already worked with its synthetic cells and are familiar with their properties. “It’s the unique properties of bit.bio’s ioCells,” notes Srivastava in a bit.bio statement, “that will enable us to create a consistent and scalable multi-cell model.” Srivastava adds, “These properties significantly reduce potential concerns that variability in experimental data could be due to variation in the cells in our models.”

Vernon cites schizophrenia as an example where complex brain cell models can help researchers better understand how treatments for the disease work. “Whilst antipsychotic medications can be effective,” says Vernon, “they do not address all symptoms of schizophrenia and a significant proportion of individuals show no therapeutic response to these agents. Moreover, they are associated with significant side effects.”

bit.bio says it expects the collaboration with King’s College to run for three years, with the resulting models released to the larger academic community.


* * *

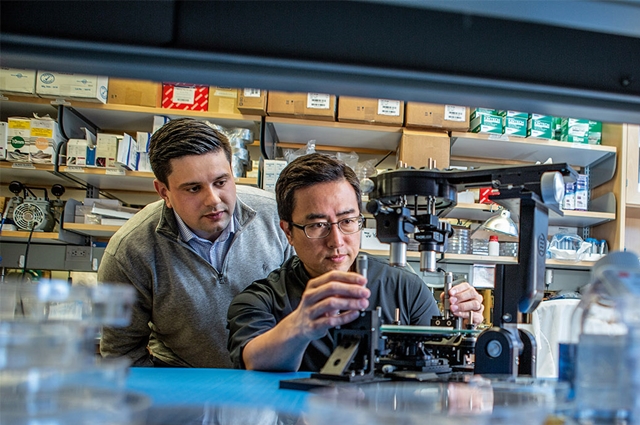

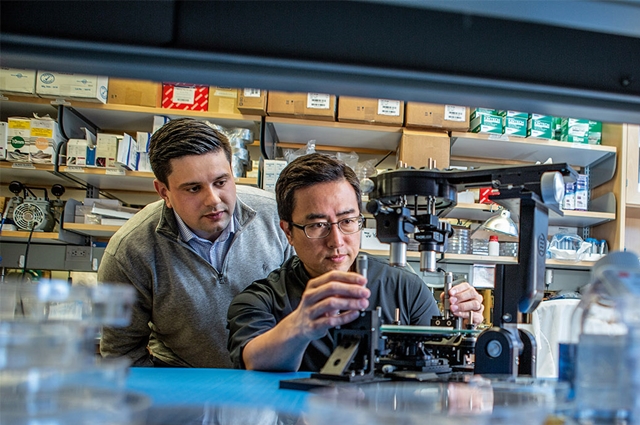

By Alan, on November 22nd, 2023  Andrei Georgescu, left, and Dan Huh in the lab at University of Pennsylvania in 2019. (Kevin Monko, Penn Today) 22 Nov. 2023. A company producing tissue samples in the lab, for drug discovery and preclinical research with robotics and artificial intelligence, raised $38 million in seed funding. Vivodyne, a three year-old biotechnology enterprise in Philadelphia, is based on research at University of Pennsylvania and Harvard University’s Wyss Institute.

Vivodyne aims to accelerate drug discovery and preclinical testing by creating life-like human tissues for assessing new treatments before clinical trials. Results of this testing, says the company, can also simulate and help design subsequent clinical trials. Vivodyne says its process creates human tissue, including blood vessels, as three-dimensional working samples called organoids on microfluidic chips that simulate organ functions. Organoids are cultured and produced from stem cells, then tested under varying conditions. The company says its organoid models now include bone marrow, small and large airways, liver, solid tumors, pancreas, intestine, placental barrier, uterus for placental implantation, skeletal muscle, and blood-brain barrier.

By using robotics, says Vivodyne, it can simultaneously test an experimental drug with human tissue organoids under various doses and multiple conditions on large numbers of samples. Data from responses of cells and tissue are captured in real time, including images. The company says it uses predictive machine learning models to generate likely responses of single cells and tissue to drug interventions, as well as effects on gene expression, transcription, and protein synthesis. These predictive models, says Vivodyne, can extend the analysis further to the context of known drug interactions, to simulate safety and efficacy outcomes in clinical trials.

“Improve the success rates of A.I.-generated drugs”

Vivodyne was begun in 2020 by biomedical engineering professor Dan Dongeun Huh at University of Pennsylvania and Andrei Georgescu, who received his doctorate in Huh’s lab at UPenn. Georgescu and Huh are now the company’s CEO and chief scientist respectively. Huh joined the UPenn faculty in 2013, following work at Harvard’s Wyss Institute for Biologically Inspired Engineering, where he took part in that group’s pioneering work with organs on chips. Science & Enterprise reported on a panel led by Huh discussing organ-chips at a scientific meeting in Feb. 2018.

“By combining the principles of organoids and organs-on-chips, we’ve created a new class of life-like, lab-grown human organs,” says Georgescu in a company statement released through Business Wire. Georgescu adds, “The result is huge amounts of complex human data, larger than you could get from any clinical trial, and we train multimodal models on this data to predict and improve the safety and efficacy of new drugs. This breakthrough not only enhances the predictive accuracy of A.I. in drug development, but also promises to significantly improve the success rates of A.I.-generated drugs, setting a new standard in the field.”

Vivodyne is raising $38 million in seed funds, led by technology start-up investor Khosla Ventures in Menlo Park, California. Taking part in the seed round are Kairos Ventures, CS Ventures, MBX Capital, and Bison Ventures. According to Crunchbase, Vivodyne raised $2 million in pre-seed financing in Mar. 2021 from Kairos Ventures. “Vivodyne’s technology,” says Khosla Ventures partner Alex Morgan, “bridges the gap between preclinical R&D and human clinical trials, while automating every step of the testing pipeline, from growing tissues, dosing, sampling and imaging, to analyzing data.”


* * *

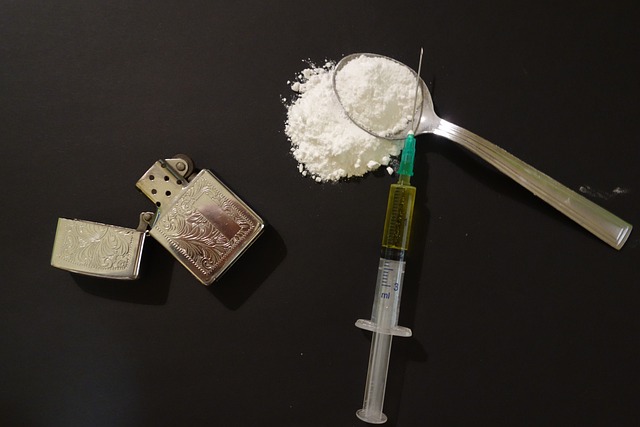

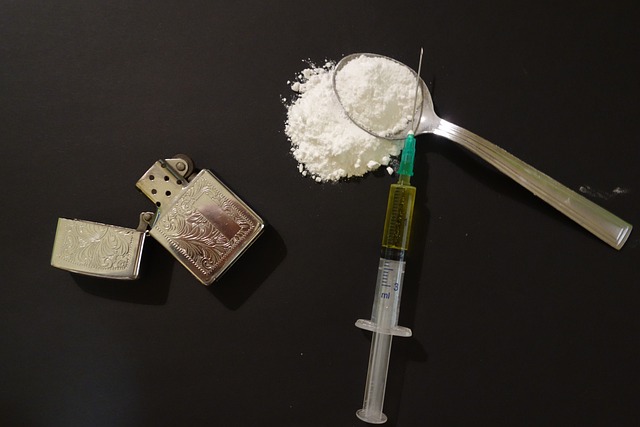

By Alan, on November 21st, 2023  (RenoBarenger, Pixabay. https://pixabay.com/photos/drugs-addict-addiction-problem-2793133/) 21 Nov. 2023. A challenge competition with a $50 million purse seeks new strategies to attack the global substance abuse epidemic, including personalized diagnostics and treatments. Wellcome Leap, an organization in Los Angeles that conducts crowdsourced competitions to rapidly advance the science of health care worldwide is sponsoring the challenge, with an initial deadline for abstracts of 21 Dec. 2023.

The Untangling Addiction challenge, says Wellcome Leap, aims to break a continuing cycle of addiction to alcohol and drugs, leading to early deaths and despair, and affecting every part of the world. The organization cites data showing the number of people worldwide with substance abuse rose by 45 percent between 2009 and 2019. In the U.S., 29.5 million people age 12 and over met the criteria for alcohol addiction, while 24 million are abusing drugs. But all regions, says the group, are struggling with high numbers of people with alcohol and drug addictions.

Compounding the problem, says Wellcome Leap, few current strategies for preventing and treating addictions seem to be working. Only a fraction of the population needing help is receiving treatment, and most addiction treatments follow the same basic approach for each recipient, without considering individual biological or neurological factors. And few effective programs prevent relapses that occur within 90 days among more than half of those treated. In addition, says the organization, new sources of addiction have emerged from abuse of prescription opioid pain killers, serving as gateway drugs for heroin and dangerous synthetic opioids like fentanyl.

Seeking new personalized treatments for addiction

The Wellcome Leap challenge calls for better methods to measure and quantify an individual’s susceptibility for addiction to different substances, particularly opioids, as well as risks of relapse. And the competition seeks new personalized treatments for addiction that allow for quantitative assessments, to reduce relapse and boost abstinence rates. The organization divides the program into five major topics:

- Quantitative addiction measures and models based on biomarkers and neurological indicators

- Demonstration and validation of neurological biomarkers for alcohol and drug abuse, particularly those accessible by simple and non-invasive means

- Long-term studies of opioid addiction risk, abuse, and prevention strategies

- Retrospective studies of biological and health datasets to reveal biomarkers associated with alcohol and drug abuse

- New treatments for alcohol and drug abuse, including medications and devices, as well as therapies targeting relapse, with the goal of doubling current efficacy rates

Wellcome Leap is seeking proposals that address one or more of the five topics from individuals, small teams, or coalitions worldwide. The challenge is open to researchers affiliated with universities, research institutes, companies, not-for-profit organizations, and government labs. Initial seven-page abstracts are due by 21 Dec. 2023, with feedback as well as invitation to submit a full proposal returned by 5 Jan. 2024. If invited, full proposals are due by 5 Feb. 2024, with selections for funding made by 6 Mar. 2024.

The Untangling Addiction challenge has a total prize purse of $50 million. However, Wellcome Leap says there are no pre-set award numbers or amounts, with funding determined by the specific proposals. Individuals or organizations not yet registered with Wellcome Leap will need to complete the group’s master funding agreement to receive an award.


* * *

By Alan, on November 20th, 2023  (NIH.gov) 20 Nov. 2023. Data from three health repositories show that a simple blood test, analyzed with artificial intelligence, reveals inherited and acquired genetic indicators that detect coronary heart disease. A team from the biotechnology company Cardio Diagnostics Holdings Inc. in Chicago describes its findings from population health data sets and technology in today’s issue of the Journal of the American Heart Association.

Cardio Diagnostics is a six-year-old company that seeks to provide simpler and more accessible diagnostics for coronary heart disease, the most common form of heart disease, responsible for more than 375,000 deaths in the U.S. in 2021. Coronary heart disease results from a buildup of cholesterol plaques in the large arteries supplying blood to the heart, which can narrow and then block blood flow to the heart. The disorder, also called coronary artery disease, is a major risk factor for heart attacks and heart failure.

The authors note, however, that most of today’s diagnostic tools for coronary heart disease are invasive, expensive, or not sensitive. Exercise stress tests with electrocardiograms or ECGs are non-invasive, but can cost more than $1,100, say the authors, yet return a true-positive sensitivity rate of only 58 percent. An angiogram has a sensitivity rate of 97 percent, but requires an invasive catheter, with contrast agent chemicals and X-rays that can be harmful to some patients. In addition, say the authors, an angiogram can cost more than $9,200.

Hydrocarbon chemicals influencing gene functions and expression

The Cardio Diagnostics solution is a simple blood draw, analyzed for biomarkers of coronary heart disease: molecular indicators from inherited genetics and from epigenetics, the chemical changes in DNA since birth that usually result from lifestyle or environmental exposures. The paper’s authors note that epigenetic changes influenced by smoking or diets high in saturated fats often contribute more to heart disease than inherited genetics. The company’s test, called PrecisionCHD, analyzes blood samples with algorithms to identify inherited and epigenetic DNA indicators contributing to coronary heart disease, particularly methylation signatures: the addition of hydrocarbon chemicals that influence gene functions and expression.

Cardio Diagnostics trained the PrecisionCHD machine learning algorithms with data from the Framingham Heart Study, a pioneering long-term assessment of cardiovascular health across multiple generations of residents of Framingham, Mass. The authors say the algorithms are based on genetic, methylation, and demographic data from 1,545 subjects in the Framingham study, and accurately detect coronary heart disease in 78 percent of cases, with true-positive sensitivity of 75 percent and true-negative specificity of 80 percent.

The new paper seeks to validate the PrecisionCHD algorithms by extending the analysis to two independent population health collections, one from the Intermountain Health system in Salt Lake City serving Utah and adjoining states and one from University of Iowa Hospitals and Clinics in Iowa City, as well as a separate sample from the Framingham Heart Study. Records and blood samples from the three repositories were randomized between those diagnosed with coronary heart disease and those without the condition, resulting in 449 cases of people with coronary heart disease and 2,067 people in the control group. The results show a PrecisionCHD test accuracy of 82 percent, with 79 percent sensitivity and 76 percent specificity.
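For readers who want to see how sensitivity, specificity, and accuracy relate, here is a minimal Python sketch using the case and control counts reported above. It assumes the three cohorts are simply pooled and that the sensitivity and specificity figures apply to the pooled group. That assumption is ours, not the paper's, and the function names are illustrative only. Under it, the pooled accuracy works out lower than the reported 82 percent, which suggests the authors calculated accuracy in a way not described here, such as per cohort.

```python
def confusion_counts(cases, controls, sensitivity, specificity):
    """Expected confusion-matrix counts implied by sensitivity and specificity."""
    true_pos = sensitivity * cases        # people with CHD correctly flagged
    false_neg = cases - true_pos          # people with CHD missed by the test
    true_neg = specificity * controls     # people without CHD correctly cleared
    false_pos = controls - true_neg       # people without CHD wrongly flagged
    return true_pos, false_pos, true_neg, false_neg


def accuracy(true_pos, false_pos, true_neg, false_neg):
    """Share of all test results that are correct."""
    total = true_pos + false_pos + true_neg + false_neg
    return (true_pos + true_neg) / total


# Counts and rates as reported in the article; pooling them is our assumption.
cases, controls = 449, 2067
tp, fp, tn, fn = confusion_counts(cases, controls, sensitivity=0.79, specificity=0.76)
pooled_accuracy = accuracy(tp, fp, tn, fn)

print(f"Expected true positives: {tp:.0f} of {cases} cases")
print(f"Expected true negatives: {tn:.0f} of {controls} controls")
print(f"Pooled accuracy under this assumption: {pooled_accuracy:.1%}")
```

Because controls outnumber cases by more than four to one, pooled accuracy sits close to the specificity figure. Accuracy alone therefore says little about how well the test finds people who have the disease.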

Robert Philibert, lead author and chief medical officer of Cardio Diagnostics, calls the findings “a game changer for personalized cardiovascular care” in a company statement, and adds the approach can “provide a more comprehensive, personalized snapshot of a patient’s CHD drivers, allowing for early intervention and tailored treatment plans.” Philibert founded Cardio Diagnostics with Meesha Dogan, a co-author and company CEO, who notes the technology can particularly help patients “in rural areas where advanced diagnostic tools are scarce and cardiovascular specialists are even more rare.” Philibert and Dogan received their doctorates in medicine and biomedical engineering respectively at University of Iowa.

* * *

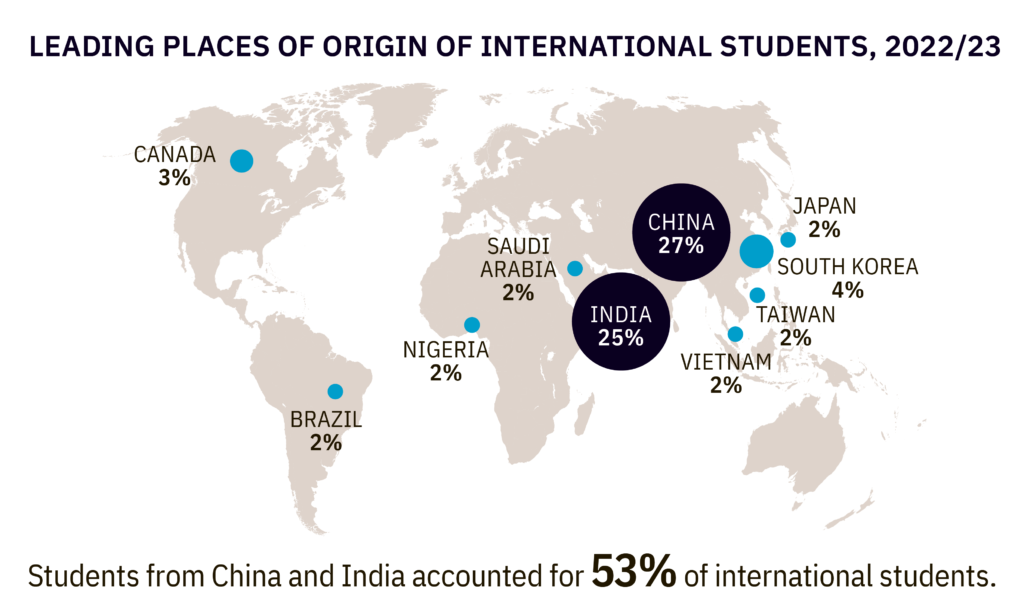

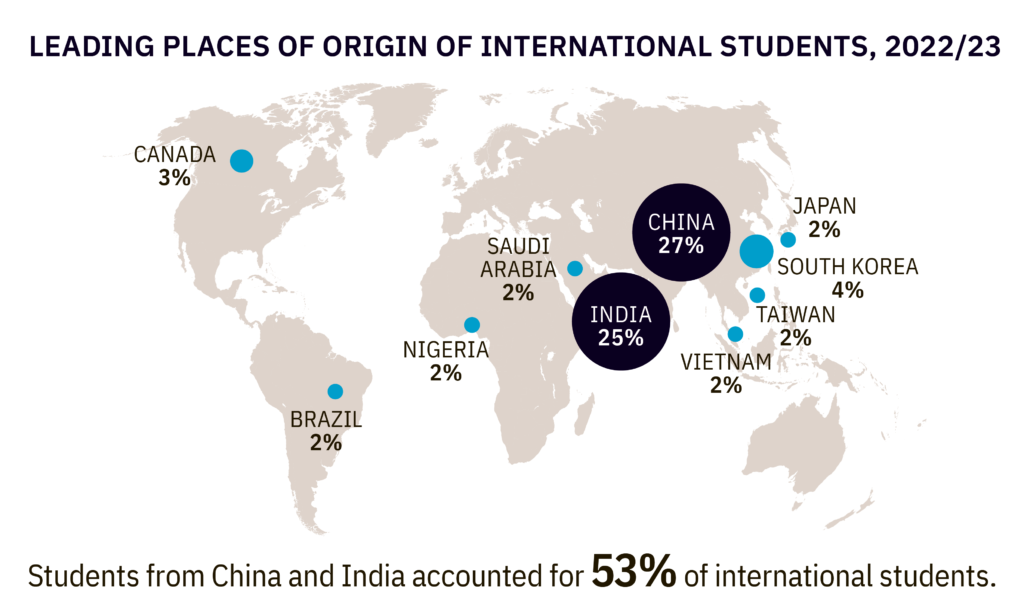

By Alan, on November 18th, 2023  (Open Doors report, Institute of International Education) 18 Nov. 2023. China and India provided more than half of all international students at U.S. universities in the last academic year, as total enrollment from overseas approached pre-pandemic levels. The Institute of International Education in New York this week issued its annual Open Doors report on international students, showing more than 1 million students on U.S. campuses in 2022-23.

Some 1.06 million international students enrolled at U.S. colleges and universities in the last academic year, according to the report, an increase of 11.5 percent over the previous year, and just shy of the all-time high of 1.1 million in 2018-19. Of that total, nearly 290,000 came from China with another 269,000 from India, accounting for 27 and 25 percent respectively. Those numbers far eclipsed the 44,000 students from South Korea, and 22,000 to 28,000 each from Canada, Vietnam, and Taiwan. The total number of students from China declined slightly (by 0.2 percent) from 2021-22, but the number of Indian students jumped by 35 percent, the largest increase for any one country.

Some 467,000 students pursued graduate or professional degrees last year, about 44 percent of the total, an increase of 21 percent from the previous year, and the largest number of graduate and professional students ever recorded in the Open Doors data. Undergraduate enrollments from overseas rose at a slower pace (1 percent), but it was still the first increase in five years. More than half (55 percent) of international students pursued degrees in science or technology-related disciplines, with nearly a quarter (23 percent) taking computer science and mathematics, and 19 percent enrolled in engineering degrees.

Twelve universities each enrolled 10,000 or more international students in 2022-23, with New York University accounting for 24,500, Northeastern University in Boston taking in nearly 21,000, and Columbia University enrolling 19,000. Universities in California and in New York each enrolled more than 125,000 international students last year.

* * *

Welcome to Science & Enterprise

Science and Enterprise is an online news service begun in 2010, created for researchers and business people interested in taking scientific knowledge to the marketplace.

In the site’s posts, published six days a week, you will find research discoveries destined to become new products and services, as well as news about finance, intellectual property, regulations, and employment.
